No Longer Reading

A blog by Kevin McCall

The real AI agenda

The statistical model of ability vs. the unpredictability of genius

As education has expanded and the opportunities for intellectual accomplishment have increased, the great minds of the past can still hold their own. They had less knowledge than we do now, but in terms of intelligence, creativity, and in particular insight, they are as good as or better than anyone working today.

And if you think about it, this is quite surprising. Not only do we have a much larger population than existed prior to the twentieth century, with a far higher proportion of the population literate, but there are also many more people who do intellectual work either professionally or in their leisure time.

If it is assumed that ability is evenly distributed throughout all populations, then for the reasons listed, we should have far more ability to draw upon. And thus, we should have completely surpassed the past. And certainly in some areas we have. But then why are some of the greats of the past still great? Consider Dante, Virgil, and Homer. William James Tychonievich has referred to them as the "three greatest writers who ever lived". I have not read enough to make that assessment, but what I would say is that they are not only better than the vast majority of writers working today, but much better.

Why should it be that one of the small number of literate people from a society with a small population should be so much better than the far greater number of writers working today?

One reason of course is that the modern environment is not conducive to producing great literature; modern culture has gone so far off the rails that it does not provide promising raw material. Especially for areas like literature, talent alone does not suffice; one's life experiences are also important.

But even in mathematics and areas of science that are less dependent upon life experience, many of the past masters are still as good as anyone working now. And it is even more surprising when we consider that athletic records have been regularly broken and yet intellectual accomplishments still stand.

I do not think there is only one answer. There are a variety of reasons that could be involved. One is a lack of motivation: people in the past frequently considered intellectual accomplishment as having some sort of transcendental value, whereas now it is just viewed as a career. Another reason could be decreasing intelligence or creativity. But perhaps the most important reason is that geniuses appear when and where they appear and this is not easily predictable.

How tall was Zacchaeus?

Zacchaeus the tax collector is introduced in the book of Luke, chapter 19:

"And entering in, He walked through Jericho. And behold, there was a man named Zacchaeus, who was the chief of the publicans, and he was rich. And he sought to see Jesus who He was, and he could not for the crowd, because he was low of stature. And running before, he climbed up into a sycamore tree, that he might see him; for he was to pass that way. And when Jesus was come to the place, looking up, He saw him, and said to him: Zacchaeus, make haste and come down; for this day I must abide in thy house." (Luke 19:1-5).

A few months ago, I was pondering the question: how tall was Zacchaeus? Of course, it's not something we can ever know with any degree of certainty. But on the other hand, it's not beyond all speculation.

The first thing to note is that Zacchaeus was noticeably short, so the question is, how short (or tall) does someone have to be for it to be notable? This is largely a statistical question. Height has been found to be normally distributed and in the normal distribution, the mean height is also the modal and the median height. So, one way to phrase this question is, how much does someone's height have to deviate from the mean to be noticeable?

In the present time, a man who is 6 ft (about 183 cm) tall is considered noticeably tall. It is hard to find the average height worldwide, but at least in English-speaking countries, 6 ft is roughly three inches (7.62 cm), or one standard deviation, above the mean height, i.e., at the 84th percentile. So, likewise, we may say that one standard deviation below the mean, or 5 ft 6 inches (167.64 cm), is noticeably short in English-speaking countries in the present time.
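As a rough check on these figures, here is a minimal Python sketch. It assumes a mean of 5 ft 9 inches (69 in), which is what the paragraph implies by placing 6 ft three inches above the mean, and a standard deviation of 3 inches. The names `MEAN_IN`, `SD_IN`, `percentile`, and `to_cm` are chosen only for illustration.

```python
from statistics import NormalDist

# Assumed figures, read off the paragraph above (not measured data):
MEAN_IN = 69.0  # 5 ft 9 in, i.e. 6 ft minus three inches
SD_IN = 3.0     # one standard deviation, in inches

heights = NormalDist(mu=MEAN_IN, sigma=SD_IN)


def percentile(height_in: float) -> float:
    """Percentage of men at or below this height under the normal model."""
    return 100.0 * heights.cdf(height_in)


def to_cm(inches: float) -> float:
    """Convert inches to centimetres."""
    return inches * 2.54


tall = MEAN_IN + SD_IN   # 72 in = 6 ft
short = MEAN_IN - SD_IN  # 66 in = 5 ft 6 in

print(f"6 ft      = {to_cm(tall):.2f} cm, percentile {percentile(tall):.1f}")
print(f"5 ft 6 in = {to_cm(short):.2f} cm, percentile {percentile(short):.1f}")
```

Under these assumptions, one standard deviation above the mean comes out at about the 84th percentile and one standard deviation below at about the 16th, so roughly one man in six would count as noticeably short.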

So, then the question is, how tall were people in Palestine 2,000 years ago? Probably shorter than people now because of generally worse nutrition. There is an interesting blog post that discusses the height of Isaac Newton, based on an article by Milo Keynes called "The Personality of Isaac Newton". The article estimates that Newton stood 5 ft 6 inches tall and also discusses Newton's speculation as to the height of the Ancient Egyptians:

"Newton is trying to calculate the dimensions of an ancient building, and concludes that the 'ordinary stature of men' was about the same 3,000 years ago as it was in Newton's time, namely 5 feet 6 inches, which is exactly the estimate I have given for Newton's own height.

Here are the quotations from Newton himself:

'The measures of Feet and Cubits now far exceed the proportion of human members; and yet Mr. Greaves shews from the Aegyptian monuments, that the human stature was about the same above 3000 years ago, as it is now ...

The stature of the human body, according to the Talmudists, contains about 3 Cubits from the feet to the head; and if the feet be raised, and the arms be lifted up, it will add one Cubit more, and contain 4 Cubits. Now the ordinary stature of men, when they are bare-foot, is greater than 5 Roman Feet, and less than 6 Roman Feet, and it may be best fix'd at 5 Feet and a half.' "

Now, it is interesting that Newton mentions the Talmudists, by which I assume he means the compilers of the Talmud, which was finished around the year 500. Also, I would not expect the height of the Ancient Egyptians to differ much from that of the inhabitants of Roman Palestine, so 5 ft 6 inches is a good estimate for the mean male height in the time of Jesus.

In doing some research for this post, I found some people who have speculated that Zacchaeus actually had dwarfism, which in the present time is defined as a height below 4 ft 8 inches (142.24 cm). But I consider this unlikely.

If the standard deviation in the time of Jesus was the same as it is now in English-speaking countries (which is an assumption; I am not sure whether this would be true in general), then one standard deviation below the mean would be 5 ft 3 inches (160 cm), and we can estimate that Zacchaeus was shorter than that. Since Zacchaeus had to climb a tree, rather than just stand at the edge of the crowd, I will estimate that his height was more than one standard deviation below the mean; but since he is not described as having dwarfism, he was probably above 5 ft. I will speculate that Zacchaeus was somewhere from 5 ft to 5 ft 1 inch tall.
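The estimate can be put into a short Python sketch. It takes the figures argued for in this post as assumptions: a mean of 5 ft 6 inches, a standard deviation of 3 inches borrowed from present-day English-speaking countries, and the 4 ft 8 inch dwarfism threshold. The variable and function names are only illustrative, and the output is a speculation dressed in numbers, not a measurement.

```python
from statistics import NormalDist

# Assumptions carried over from the post, not historical data:
ANCIENT_MEAN_IN = 66.0     # 5 ft 6 in, Newton's "ordinary stature of men"
ASSUMED_SD_IN = 3.0        # modern standard deviation, assumed to apply then
DWARFISM_CUTOFF_IN = 56.0  # 4 ft 8 in, the present-day definition
LOW_GUESS_IN = 60.0        # 5 ft, lower end of the speculated range
HIGH_GUESS_IN = 61.0       # 5 ft 1 in, upper end of the speculated range

ancient = NormalDist(mu=ANCIENT_MEAN_IN, sigma=ASSUMED_SD_IN)


def feet_inches(total_inches: float) -> str:
    """Format a height in inches as feet and inches."""
    feet, inches = divmod(total_inches, 12)
    return f"{int(feet)} ft {inches:g} in"


def z_score(height_in: float) -> float:
    """Number of standard deviations above (+) or below (-) the mean."""
    return (height_in - ANCIENT_MEAN_IN) / ASSUMED_SD_IN


one_sd_below = ANCIENT_MEAN_IN - ASSUMED_SD_IN
share_in_range = ancient.cdf(HIGH_GUESS_IN) - ancient.cdf(LOW_GUESS_IN)

print("One SD below the mean:", feet_inches(one_sd_below))
print("z-scores of the guess:", z_score(LOW_GUESS_IN), round(z_score(HIGH_GUESS_IN), 2))
print(f"Share of men in the guessed range: {100 * share_in_range:.1f}%")
```

With these inputs the guessed range sits between about 1.7 and 2 standard deviations below the mean, well above the dwarfism threshold, and only a few men in a hundred would fall inside it.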

Quality of thought

At the end of Assistant Village Idiot's post Obama's IQ, he writes the following:

"Reaching much farther back, the ability to be competent in a range of subjects suggests that Thomas Jefferson and Theodore Roosevelt were likely of high intelligence. A few others showed such facility in writing and composition, even on highly abstract subjects, that they must surely have had sufficient wattage. Madison and John Adams come to mind there.

If one has an agenda about a president it is usually easy to 'prove' him stupid or brilliant by carefully selecting the data. Andrew Jackson may have been close to illiterate, so you can paint him a dull gray if you like. Yet he also had such a prodigious memory that when legislation was being discussed, he could recall every previous draft verbatim from what he had heard read out, sometimes over weeks. So bright colors there. It depends what you choose."

A commenter wrote:

"Herbert Hoover and his wife Lou translated Agricola's classic work on mining from Latin into English, just for fun."

What I find most noticeable about this analysis of US presidents is that there is a change from those presidents who were born before the age of mass media to those who were born after. As Assistant Village Idiot mentions, it is not straightforward to compare presidents from before the age of mass media with those after in terms of pure intellectual ability. However, we can compare them in terms of the character of their intellectual accomplishments.

There is a definite qualitative shift. Consider for instance Ignatius L. Donnelly, a US legislator at both the state and national level, who is best known for a book about Atlantis which theorized that the Biblical Deluge and the Atlantean catastrophe were the same thing. I have not read the book, so I cannot comment on that aspect, but the fact is that Donnelly wrote and researched the book himself: no ghostwriters, no use of books merely as a promotional tool.

The character of the thought of many individuals from before and after the age of mass media, especially with the rise of social media, has undergone a definite change. It is not explicable in terms of intelligence, both because of the speed of the change and also because with this change in the nature of thinking, high intelligence has been repurposed as efficient processing of media inputs.

Bruce Charlton has two posts from 2011 which describe this phenomenon well. One, titled "complexity of thought", says that too much time spent communicating (including taking in communications) diminishes time spent thinking, and if the balance is too much in favor of communication, then complexity of thinking substantially diminishes:

"This explains why the internet has not led to any advances in genuine understanding, since the millions-fold expansion in the amount of data (plus increased social interaction via electronic media, plus increased volume and usage of mass media) have led to an equally vast simplification of cognition.

The average modern human mind is now more like a relay station than a brain - performing just a few quick and simple processes on a truly massive flow-through of data.

This applies equally, or especially, to intellectuals who are plugged-into oceans of data in a way never before possible. When all (almost all) intellectual output is simply a summary of unassimilated input, as we see all around; then we can perceive that intellectual processing has become grossly simplified."

And it goes beyond simplicity and complexity. The question is whether one's mind is thinking and understanding on its own or just processing data from outside.

The other post "The hierarchy of authorities" compares the current situation to the great thinkers of the past:

"nobody really believes that a high school kid with Wikipedia at his fingertips, or a hotshot globetrotting research professor, actually 'know more' than Aristotle or Aquinas. Rather, students and academics now actually know almost-nothing, and are - presumably - stuffed and overflowing and mere-conduits-for billions of words, sounds and images of fashions, illusions, delusions, distortions and open-ended misunderstandings."

Consider Aristotle and Aquinas. They had both built up vast edifices of thought within their minds; combining both understanding and knowledge. A great part of their writings was simply communicating this thinking. Similarly, Porphyry writes how his teacher Plotinus did not write down any of his teaching until after teaching for ten years. In other words, the teaching was the understanding of Plotinus, given expression by the words he chose to express it at any given time.

What is possibility?

In a response to this post by Francis Berger, the commenter Tom uses an analogy which I found quite thought-provoking. He writes:

"What if Shakespeare had invented the English language? No one would have understood anything he wrote, that's what. It could be explained in the context of another language, by taking the persistent elements of that language to explain Shakespeare's new one. Without that context it would be unintelligible nonsense, and arguably not creative at all.

...

The genius of Shakespeare is not that he invented the English language, but that he understood so well the pre-existing tools he had to work with that he was able to assemble them in brilliant new ways. So even the combinations no one had heard before his plays, like upstairs, were immediately intelligible."

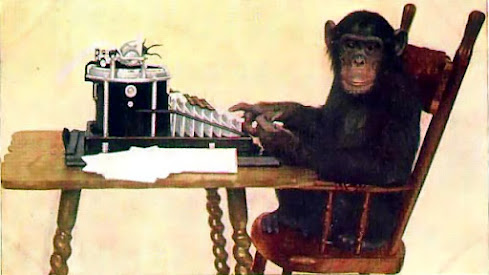

In what sense was Shakespeare creative? In theory his plays are just arrangements of letters, spacing, and punctuation. This has led to the famous thought-experiment that the text of the plays could be produced by a sufficiently large number of monkeys typing for a sufficient length of time.

And besides the text, what about the English language itself? Is it true that once the rules of the English language and the given words have been set up then speaking and writing are just picking combinations out of this vast universe of possibilities?

In terms of speaking and writing, this has been satisfactorily explained by pointing out that when humans make words and sentences, they are choosing to combine letters to communicate meaning. The meaning is what distinguishes any particular choice of speaking or writing from a random choice out of a universe of possibilities. This is because, from the perspective of randomly generated text or speech, meaning doesn't come into it. Even the fact that the words are in English is unimportant when considered purely in terms of permutation of letters, spaces, and punctuation. It may as well be permutations of pebbles.
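To give a sense of how large this universe of permutations is, here is a small Python sketch of the typing-monkey arithmetic. The 30-key keyboard (26 letters, a space, and three punctuation marks) and the function name `chance_of_typing` are hypothetical, chosen only for illustration, and each keystroke is assumed to be uniformly random and independent.

```python
from fractions import Fraction

# Hypothetical keyboard: 26 letters, a space, and three punctuation marks.
ALPHABET_SIZE = 30


def chance_of_typing(text: str, alphabet_size: int = ALPHABET_SIZE) -> Fraction:
    """Probability that len(text) uniformly random keystrokes reproduce text exactly."""
    return Fraction(1, alphabet_size ** len(text))


phrase = "to be or not to be"
p = chance_of_typing(phrase)

print(len(phrase), "keystrokes")
print(f"about 1 chance in {float(1 / p):.2e}")
```

Note that the calculation uses only the length of the phrase. Any other string of eighteen keystrokes, meaningful or not, is exactly as likely, which is the sense in which meaning does not enter into the random model at all.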

But when we go to bigger topics, we have to even go beyond that. I believe that the model of possibility as actualizing a universe of possibilities that are already "there" is unsatisfactory when coming to terms with creation and free will. (And this is true whether creation and free will are considered according to the traditional understanding or according to the pluralist understanding).

For instance, Leibniz famously believed that God chose to actualize the best of all possible worlds. But the key word here is "possible". When we think of possible worlds in practice we think of our world but modified in various ways, great or small. But what does possible world mean before any worlds are created?

Or in terms of free will, choices are sometimes conceived in terms of actualizing prior possibilities. But then this leads us to strange scenarios like the idea that whenever someone makes a choice, the universe splits and all the choices that weren't made happen in some other universe. And this comes from the idea that those choices are already "there" before they were made. While it is true that our actions are already constrained because the world is not made by us, I think there's still something more there.

Or also, the idea of the Great Chain of Being as something like a probability distribution of beings where God chooses which beings to actualize and which to leave uncreated. But then that leaves us in a strange situation: where are these uncreated beings? In fact, if God does create beings from nothing and it really is nothing, not even His thoughts (if such a thing be possible), then that might be one way that free will comes to exist.

In pluralist metaphysics, we are faced with the same issue. There are an indefinite number of beings, each unique. (On a side note, I will say that the base assumption of pluralism does not specify the characteristics of these beings; there may be many different genera that they fall into). But then what governs the characteristics of these beings - is it some sort of prior distribution of being or are all possibilities (whatever that would mean) expressed? I don't think either of those is satisfactory.

The nature of possibility is something that human beings will never fully comprehend, but I believe that it is still worth thinking about. In order to understand creation and free will at a more fundamental level, we need a better understanding of possibility and in particular, we need to understand what it means for something to be truly new, not just selected from a universe of predetermined possibilities.

The three stages of Institutions

In the course of their decline, institutions go through three stages. In the first stage, institutions are a crystallization of a purpose or goal of a group. The institution is not separated from the underlying purpose and the understanding of that purpose.

In the second stage, the institution becomes dominated by rules. The formal rules which previously had taken second place to the underlying purpose now take precedence. This stage is the stage of legalism. If the original institution was good, those who made the rules were well motivated and further, if the rules themselves are good, clearly stated, and respected, then this stage may not be that bad. In fact, it may actually have much good. But the weakness is that when the rules have taken primacy, the institution can be manipulated by changing rules and procedures.

If this goes far enough, then we get to the third stage, when the institution is co-opted either to do something different from or even opposed to its original purpose. In this stage, the rules that previously were interpreted legalistically, but fairly, are now selectively (and dishonestly) interpreted to serve whatever the new agenda.

Not all institutions will progress through these stages. Some may simply be destroyed outright in the first two stages. Or an institution may be taken over immediately by force rather than gradually. Or an institution may be revitalized by recovering the original impulse or another purpose before reaching the third stage.

However, these three stages are useful because this is the path that the institutions in the West have followed over the past century (with roots going back before this).

An example of this in a concrete sense is education. For most of human history, education was in the first stage. People learned directly from family or community members or through apprenticeship or through schools that were directly connected to the purpose of passing down knowledge and values. For instance, schools in which teachers were directly paid by parents to teach children or schools connected to churches such as the Cathedral schools during the Middle Ages.

Then in the second stage, education was viewed as a "functional system", eventually becoming a free-floating system such that whatever happens in a building called a school is deemed education. And finally, we have the current stage where education is increasingly being used for cultural subversion, in direct opposition to its original purpose to pass on culture.

In a recent post, Bruce Charlton discusses alternative institutions and the difficulty of building them under the current circumstances. If institutions are primarily institutions, then they will only succeed by luck. In other words, if people believe that rules, procedures, and techniques alone will prevent institutions from being co-opted, then this is a mistake. Rules, procedures, and techniques can be helpful so long as they are at the service of the original purpose and the people in the institution stay true to that purpose.

Self-Organization or Creation?

For many decades, there has been wide belief that society organizes itself. That given facts about human nature, society will just fall into a certain pattern. And by many these were considered the best kinds of explanations, that to really understand large-scale human behavior, one should look towards these principles of self-organization, which are laws like any law of physics.

However, from the perspective of the past two years, seeing that things can change so suddenly and so completely, and looking back and seeing the deterioration that made the birdemic and related events possible, it is clear that these ideas are far from universally applicable. Not that bottom up organization does not exist, but that it is dependent upon the people who so organize themselves. If the people change, if the units which make up the larger group change, then the organization of that group also changes.

It turns out that the traditional means of explaining human behavior by purpose and understanding is more fundamental than systems-type explanations.

But even given all this, what if there is more to the analogy with physics? One of the most important metaphysical principles is that nothing can give what it does not have. If society doesn't "just happen", then we might ask whether likewise, the universe doesn't "just happen"? That while there is self-organization, other things are going on beyond our knowledge. And in that case, it would be better to think of it as being created than being self-organized.

True symbols can be obscured but not corrupted

Two well-known symbols used in the present day, one overtly, the other less so, are the rainbow and the five-pointed star. But both of these symbols have a quite different origin.

The rainbow has been used as a religious symbol as far back as the book of Genesis, which reads (9:12-15):

"And God said: This is the sign of the covenant which I will give between me and you, and to every living soul that is with you, for perpetual generations. I will set my bow in the clouds, and it shall be the sign of a covenant between me, and between the earth. And when I shall cover the sky with clouds, my bow shall appear in the clouds: And I will remember my covenant with you, and with every living soul that beareth flesh: and there shall no more be waters of a flood to destroy all flesh."

The pentangle is also a Christian symbol (in particular in the medieval poem Sir Gawain and the Green Knight), relating to the five senses, the five wounds of Jesus, the five joys of Mary, and the five virtues of knighthood. The pentangle was also used by the Pythagoreans, who, although they have been unfairly maligned as either a cult or a political cabal, were a force for good in the world, in particular through the purity and austerity of their lives.

But notice that when the Establishment uses these symbols, they have to change them. In the Infogalactic article on the subject, there is a picture of both a Pythagorean pentangle and a pentagram design by Aleister Crowley. They look completely different. Likewise, the symbol being foisted upon us now does not even look like or have the same colors as a rainbow occurring after rain.

That suggests that true symbols have a quality of their own which cannot be corrupted, only obscured. And for that reason we should treasure the true symbols and ignore the fakes.

(William Wildblood and William James Tychonievich have also written posts on this topic, about how the rainbow as a symbol has its own significance).

The two questions of AI

This post is inspired by a recent Orthosphere post on the Turing test as well as the discussion in the comments. I also read Turing's 1950 paper "Computing Machinery and Intelligence" to see how he considered this issue.

The question of whether a machine can think involves two questions. Although these are related, it is worth distinguishing them for the sake of clarity in thinking. The first is the theoretical question: Is it possible for humans (or perhaps some other species) to make a machine that can think? In asking this question, I am using thinking as it is generally understood, in that thinking requires consciousness. Furthermore, it may also be that all consciousness carries with it some degree of free will, so any conscious machine also has free will and can be autonomous in its actions.

This question has two parts. First, whether it is possible at all. Second, whether any human being will ever be able to figure out how to do so. It may be that there is a method for making conscious artifacts but no human being will ever have the intelligence, creativity, and understanding to discover it. As to whether it is possible at all, a common response is to flippantly say: "Humans are machines and we think, so it must be possible." But this statement already begs the question. It is better to say: "We know that mind and matter can occur together in humans and animals, so it may be possible for artifacts."

As to whether this is actually possible, it's completely unknown. We don't know how mind and matter connect, so we do not know how to bring about such a connection. We do not know what method, if any, would work; however, we can rule out known methods. In particular, computation is not sufficient to bring about consciousness. Computation is simply rule-following; it is lesser than consciousness: a conscious human being can generate computations (by doing an arithmetic problem, for instance), but computation alone does not generate consciousness.

This brings us to the second question, which is the practical issue: to what extent can human beings make machines that can imitate human behavior, regardless of whether the machines are conscious or not?

The answer to this question is also unknown. We do not know the limits of human inventiveness and we do not know all possible methods by which human behavior might be imitated by machines, so it is not possible to answer the question in general.

By distinguishing these two questions, we can see that there are two distinct approaches to artificial intelligence. Those interested in the first question are primarily those interested in philosophy, in understanding consciousness as it is in itself, not how it can be redefined as part of a current research program.

On the other hand, I would estimate that the majority of AI enthusiasts are primarily interested in the second question. Their goal is to make more powerful computers and to make computers that can perform more tasks. They are not really interested in the philosophical issue.

And this makes sense because the question of consciousness is not directly related to making machines imitate human behavior or increase in computational power. There are animals that live in remote places and hardly interact with humans. These animals are conscious, and it may well be that someone discovers a means to endow a machine with a consciousness remote from human concerns, as these animals have. Also, consciousness and computational power do not inherently go together.

Metaphysical Convergence

Metaphysical convergence is when the same conclusion or idea occurs in different metaphysical systems but is reached by a derivation from different sets of principles. I chose convergence because of the similarity to convergent evolution, when species evolve similar features, though they are not closely related in terms of a recent common ancestor.

In a post at the end of October, "Man and woman is primary - masculine and feminine are secondary abstractions" Bruce Charlton writes:

"the soul of a Man is either a man's or a woman's soul. This is a fact that carries-through whatever happens in mortal life - which carries through attributes, biology, psychology and social roles."

I commented, saying that Virgil seems to have thought something similar because in the Aeneid, Aeneas encounters the soul of Caeneus in the underworld:

"Caeneus, once a youth, now a woman, and again turned back by Fate into her form of old."

Although Caeneus turned into a man physically, the soul was a woman's soul.

Bruce Charlton responded by saying:

"Well, Virgil certainly did not have the metaphysical assumptions which I do. Presumably this is a specific coincidence of conclusions, rather than the same baseline reality."

And this is an interesting fact if you think about it. Here we have two fairly different metaphysical foundations giving the same conclusion.

One way I find this helpful to think about is by drawing connections to mathematics. For instance, it is well-known that in addition to the familiar Euclidean geometry, there is also Non-Euclidean geometry, which was discovered when it was realized that the parallel postulate could be replaced with two different postulates, each of which gave consistent geometries. But in addition, there is also absolute geometry, which consists of those geometric facts which do not depend on the parallel postulate for their proof and hence are true in both Euclidean and Non-Euclidean geometry.

The analogy is that we may have a set of metaphysical assumptions where if one or more are changed, then we can derive a completely different metaphysics from the new assumptions. This is expected, but what is also interesting is that there may be some conclusions that hold under both sets of assumptions.

Another situation that might happen is when different metaphysical systems give different justifications for the same conclusions. They both reach the same place by a different route.

It is also interesting that the parallel postulate article lists many statements that are mathematically equivalent to the parallel postulate. Mathematically equivalent does not necessarily mean that they are saying exactly the same thing, but rather means that given the parallel postulate and the rest of the Euclidean axioms, one can prove the equivalent statement. And, conversely, given the equivalent statement and the other axioms, one can derive the parallel postulate. Two mathematically equivalent statements stand or fall together: if one is true, then so is the other, and if one is false, then the other is as well.

And there may be something similar in metaphysics as well, metaphysical assumptions that also stand or fall together.

Another possibility is metaphysical independence. Just as the parallel postulate is independent of the other axioms of Euclidean geometry, that is, they can neither prove it nor disprove it, there may be questions that can be asked within any particular metaphysics that can neither be concluded true nor false within this metaphysics. More assumptions are needed.

One could call the study of different metaphysical systems and how they relate meta-metaphysics, perhaps.

I do not have any particular thoughts on these matters in this post, but I believe that thinking about these kinds of things could be useful. I am curious if any readers have any thoughts about or examples of metaphysical convergence or other matters in meta-metaphysics.

What does it mean to consider the spiritual?

What does it mean that we should consider the spiritual as well as the material? This is a question that we must face in the present era. It is not an abstract problem, where we can simply solve it and write down the answer. It is a challenge that we have to face, both in thinking and in doing. In this post I want to write down some thoughts about this issue.

I'll start with what considering the spiritual does not mean.

Even though the word "spiritual" has been misused, "consider the spiritual" can't be an indirect way to say "do nothing". This is because the spiritual includes the material. In a recent post, Bruce Charlton expresses this point:

"Everything material is also spiritual. Therefore, all our material actions or behaviors, every-thing that happens in the material realm, has spiritual implications.

...

The material is a 'sub-set' of the spiritual."

Now, it may be that any particular person, due to their station in life or a special vocation may limit their sphere of activity in the material world. An example is the case of Sister Andre Randon, who at 117 years old survived the birdemic at the beginning of this year. She said: "No, I wasn't scared because I wasn't scared to die ... I'm happy to be with you, but I would wish to be somewhere else - join my big brother and grandfather and my grandmother."

As one would expect from a 117 year old nun, Randon recognizes that she is at the end of her life and is no doubt preparing for what comes next. In Randon's case, due to her age her activity in the material world is going to be rather restricted. Another possible example would be a monk who lives as a hermit and spends all day in prayer.

But neither of these cases (nor similar situations) is an instance of doing nothing; rather, they represent people who operate in a restricted sphere in the worldly sense, either by choice or necessity. However, the actions of such people do have spiritual implications, even though these may not be readily apparent.

As for what "consider the spiritual" does mean: in the broadest sense, it means to recognize that the world consists of a greater reality than the material and to respond accordingly.

One aspect of this is that certain actions are off the table. Recognizing the spiritual nature of reality means recognizing that there is a moral law, so to do evil, even if it would benefit us, even if (we think) it would benefit a vast number of other people, is forbidden. "What does it profit a man to gain the whole world, yet forfeit his soul?" (Mark 8:36).

Another aspect of the "practical application" of recognizing the spiritual is that we are better able to understand what is going on. As Chesterton noticed in his day, and as is even more true now, it is easy to have no higher perspective than adapting to whatever seems to be happening. But by the use of spiritual principles, one can evaluate trends according to how they correspond to actual reality, not virtual reality, and thereby avoid being taken in by them.

In addition to avoiding what is bad, one can also perceive what is good. Now, this is not easy by any means. However, since the spiritual is bigger than the material, we are not limited to our own plans and what we can think up. The spiritual can take up many different things, and they can unfold in an unexpected manner, providentially. Bruce Charlton writes in a recent post:

"I am currently thinking much about divine providence ...

This is - of course - how Jesus told us all to live in the Gospels ('consider the lilies' etc) - which is not to ignore the future, nor to live unthinkingly or in denial of reality; but to do the right things (one at a time, as they arise and not because they are part of a strategy) and trust to God to organize matters for the best.

God does this positively and negatively.

Positively by weaving-together the work of all Men who do good (and doing includes thinking).

Such positive divine providence is shown at work in The Lord of the Rings where the free choices of the characters lead to positive unforeseen (and unforeseeable - even by the wisest such as Gandalf, Elrond and Galadriel) outcomes."

And the fact that such things are unforeseeable and difficult to articulate is not a fault of the spiritual. In fact, it is not just the spiritual where this occurs. In general, if something really is different and really is unlike what we are used to, then before seeing it, it is difficult to describe. And even after encountering something like this, it may take some time to get used to it and to understand it. Tom Shippey gave an example of this phenomenon in his book J.R.R. Tolkien: Author of the Century, where he quotes book critics saying things like: "The next type of literature will be completely different. It will not just be a variation on what we know, but something entirely unfamiliar." He then quotes those same critics disparaging The Lord of the Rings in their reviews. The new literature really was completely different, but even some of those who correctly predicted this were not able to appreciate it.

And so, considering the spiritual means considering reality as a whole. Our task in this era is to do this, even while living in a despiritualized world, though putting it into practice is the work of a lifetime.

The real AI agenda

On a post by Wm Briggs about artificial intelligence, a commenter with the moniker "ItsAllBullshit" writes: ...

-

I believe in the evolutionary development of consciousness, meaning that human consciousness has changed and evolved over time. Further...
